When I was a kid, one of my favorite authors was Isaac Asimov. He is most famous for his science fiction stories about robotics and machines that could think for themselves.

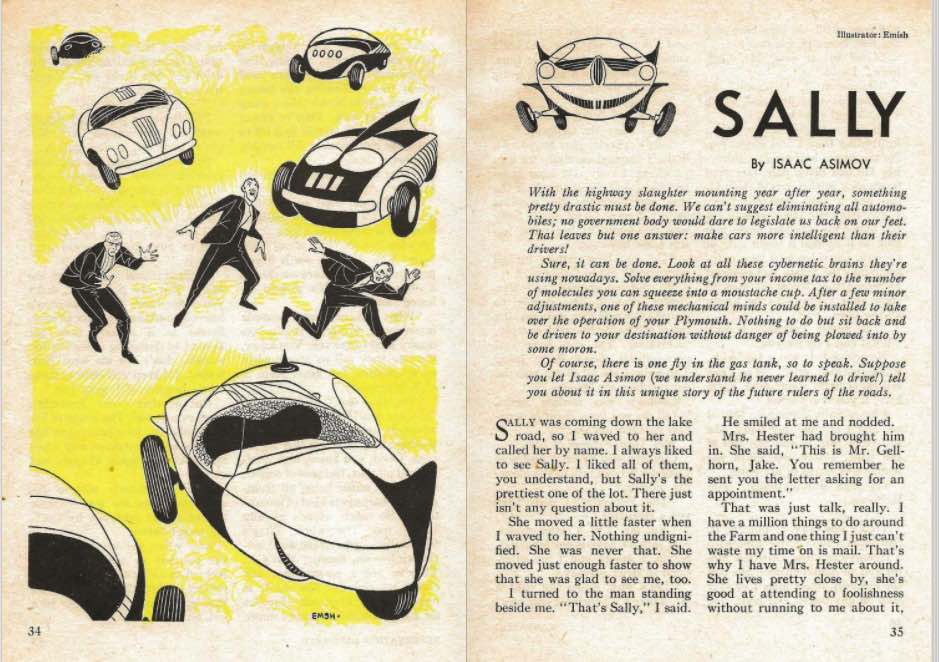

I clearly remember one of his stories that still haunts me to this day. It was titled “Sally” and was published way back in 1953.

Thanks to Archive.org, you can read the entire story online.

The story is set in the year 2057, when cars no longer need humans to drive them. They have been fitted with “positronic brains” that make them self-aware and let them think and act on their own without human intervention.

The main character of the story, Jake, runs a sort of retirement home where he takes care of a group of sentient cars that have lived past their prime.

An unscrupulous con man by the name of Gellhorn learns of this retirement home for expensive sentient cars and wants to steal one to sell on the black market.

Gellhorn manages to steal one of the most prized sentient cars on the lot, who happens to be named Sally.

But Gellhorn doesn’t realize just how independent cars like Sally are. They can think for themselves just like humans. And even more startling, they have human emotions. Like anger.

When Gellhorn makes off with Sally, the other sentient cars come to her rescue. And in a fit of revenge, as we learn at the end of the story, Gellhorn is found dead in a ditch with multiple tire tracks all over him.

Jake suddenly has a frightening thought: what would the millions of sentient cars in the world do if they all decided that humans were their enemy?

That story, written way back in the 1950s, clearly belonged in the realm of science fiction. Computers were BARELY out of their “toddler” stage of evolution back then, and the very notion of a self-driving car must have seemed laughably impossible.

But fast forward more than 60 years from the time that story was written, and we are on the cusp of self-driving car technology. Companies like Tesla and Uber are already testing autopilot technology that lets their vehicles drive themselves, though recent accidents have brought increased scrutiny.

Commercial and military jets have relied on autopilot for many years, and we’re now getting close to seeing similar technology in our everyday vehicles.

This raises a rather interesting dilemma that, sooner or later, will need to be addressed decisively.

The Autonomous Vehicle ‘Trolley Problem’

This dilemma is famously known as the “trolley problem”: a thought experiment that explores what it means to make the right judgment call in an emergency.

The thought experiment goes something like this.

Imagine a runaway trolley car barreling down the tracks at a dangerous, potentially fatal speed. You happen to be standing some distance ahead of the oncoming trolley, next to a lever that can switch it between two different tracks.

On one track, five people are standing, unaware of the oncoming trolley. On the other track, one person is standing.

If you were the person next to that lever, with only a few seconds to divert the trolley, what would be the moral and ethical decision? Would you send the trolley down the track with one person? Or the one with five?

What if the one person was a blind man and the five people were toddlers? You can plug as many variations as you can think of into this thought experiment, but what really matters is the decision you make and the reasoning behind it.

Now imagine replacing the person at the lever with a machine or computer that has to make the same decision. What will the machine decide, and what rationale and thought process will go into its final decision to divert the trolley?
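To make that question concrete for programmers, here is a minimal, hypothetical Python sketch of the most naive rule a machine could follow: divert to whichever track has the fewest people on it. The `Track` class and `choose_track` function are invented purely for illustration; this is not how any real autonomous system is claimed to work.

```python
from dataclasses import dataclass


@dataclass
class Track:
    # Hypothetical model of one track: a label and a head count of people on it.
    name: str
    people: int


def choose_track(tracks: list[Track]) -> Track:
    # Naive rule (an assumption for illustration): pick the track that harms the fewest people.
    # If two tracks tie, min() returns the first one in the list.
    return min(tracks, key=lambda t: t.people)


main = Track("main", 5)
side = Track("side", 1)
print(choose_track([main, side]).name)  # side
```

Notice what this rule leaves out. A head count can’t tell a blind man from a toddler. Every factor the thought experiment asks you to weigh would have to be written into that `key` function by someone, and that someone would be a programmer.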

Not Just a Thought Experiment

If you haven’t seen the movie “I, Robot,” starring Will Smith, I highly recommend it.

It’s loosely based on other classic science fiction stories by Asimov about sentient robots.

Will Smith plays Detective Spooner, a Chicago homicide detective in the year 2035, when robots have become advanced enough to act as personal servants and skillful enough to handle blue collar jobs like sanitation and delivery.

All robots are governed by the three laws of robotics, which state the following:

- First Law – A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- Second Law – A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- Third Law – A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

These three laws gave Asimov a lot of room to play with in his stories. He explored the many gray areas where robots had difficulty interpreting the laws to determine the right course of action.

Detective Spooner has a particular past that makes him distrust all robots. He was involved in a car accident in which his car and another car plunged into a river. A little girl was trapped in the other car as it steadily sank to the bottom.

A robot that happened to witness the entire accident spotted Detective Spooner and rescued him. But Spooner didn’t want to be saved. He desperately tried to tell the robot to save the little girl instead, but the robot, following whatever logic and algorithms it was programmed with, calculated that Spooner had the better chance of survival and determined that he was the most logical person to save.

The robot’s decision to save Spooner’s life over the little girl’s has haunted him ever since and left him distrustful and fearful of robots. When he eventually explains why to another character, his point is that a human factors emotion and empathy into a decision like that, while machines and robots do not.

This goes to the heart of my premise: we are fast approaching a point where computer programmers will have to think about things beyond technical architecture and design.

Programmers will have to start grappling with questions that have traditionally never been part of their job.

We are hurtling towards a near future where cars will begin to drive by themselves without human intervention.

If we transfer the trolley thought experiment to autonomous cars, what should a self-driving car do if it had no choice but to crash into either a bus full of children or a car filled with the passenger’s close friends and acquaintances?

What is the “right and moral” choice an autonomous car needs to make?

I think we’re going to see a new wave of legislation and legal enforcement around how artificial intelligence needs to behave.

In fact, I could totally see Asimov’s famous three laws of robotics being codified into a sort of AI “ten commandments” that all artificially intelligent machines and computers must abide by.

This raises a whole world of questions and unknowns. Who is liable when an AI machine has to make a life-or-death decision? Will there be entirely new government agencies responsible for enforcing these AI laws?

Will future computer science curricula have to require courses in philosophy, the humanities, and other liberal arts? Or psychology and sociology? That may not be as outrageous as it sounds.

I have no doubt it will usher in a whole new era where technology and morality will have to intersect.