Ask any software developer what they detest most about brownfield software projects (projects inherited from a previous developer or team rather than designed by the person now maintaining them), and it’s a safe bet they’ll say two things about the codebase.

- It’s hard to read and understand

- It’s overly complicated and over-engineered

I’ve created a sort of software complexity “blind taste test” that I run whenever I look at a software project or piece of code.

Every software application has an entry point, much like the entrance to a building or a house. It’s where an application kickstarts itself to life, much like turning the ignition in your car.

It’s important to know where this entry point is, because it gives a software developer who needs to get up to speed on an unfamiliar codebase a 30,000-foot, bird’s-eye view of the application as a whole.

At the most basic level, every software application is nothing but a set of computer instructions, much like a set of instructions for making a sandwich.

1. Get two slices of bread

2. Put lettuce on one slice

3. Put tomato on the other slice

4. Add cheese and lunchmeat to one slice

5. Close the slices with the sandwich filling between them

6. Bon appetit!

Step 1 initiates the process of making a sandwich and step 6 defines the completion (yummy sandwich inside your tummy).

At its basic core, a software application is much like this set of instructions to make a sandwich.

It’s easy to tell where the set of instructions starts, and where it ends.
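To make the analogy concrete, here is a minimal sketch of the sandwich steps written as a tiny Python program. The function names (`make_sandwich`, `main`) are my own illustrative choices, not taken from any real project. The point is only that a reader can see at a glance where the program starts and where it ends.

```python
def make_sandwich() -> list[str]:
    """Carry out the sandwich steps in order and return a log of each one."""
    steps: list[str] = []
    steps.append("Get two slices of bread")
    steps.append("Put lettuce on one slice")
    steps.append("Put tomato on the other slice")
    steps.append("Add cheese and lunchmeat to one slice")
    steps.append("Close the slices with the filling between them")
    steps.append("Bon appetit!")
    return steps


def main() -> None:
    # The entry point: everything the program does starts here.
    for number, step in enumerate(make_sandwich(), start=1):
        print(f"{number}. {step}")


if __name__ == "__main__":
    main()
```

Anyone opening this file can find `main`, follow it into `make_sandwich`, and read the steps from top to bottom.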

Ideally, every software application should be structured in the same straightforward, logical way.

Well-constructed software projects just LOOK RIGHT.

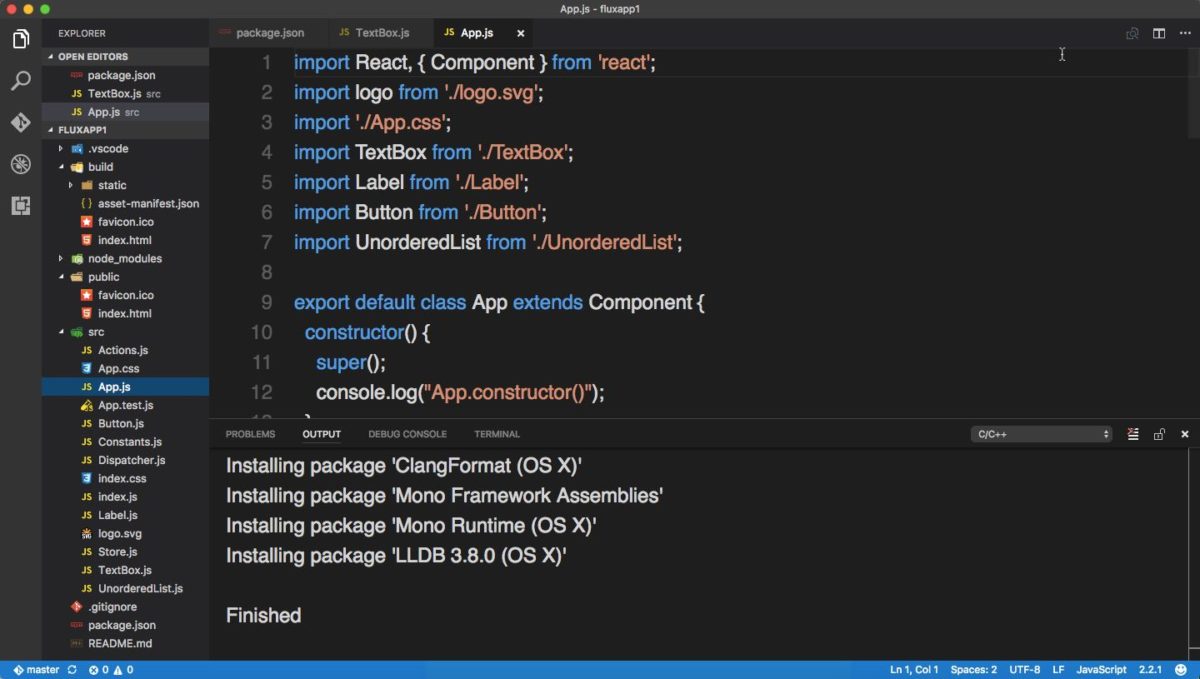

Most software development IDEs and related tools let you load all the code files that make up a project into one place.

A well-written, well-thought-out software project just looks right inside a code editor (see above), even at a glance.

This is my personal “complexity blind taste test” that I use on any new codebase I have to try to understand.

Sadly, this doesn’t seem to happen very often on my watch … << cue sad trombone music >>

Here’s What Really Happens

What usually happens is I start examining the codebase and I can’t make heads or tails of where the entry point of the application is.

Not to mention that the project codebase seems to use every third-party library and framework on the planet!

Oh, and let’s not forget the liberal use of Inversion of Control (IoC) containers, design patterns, dependency injection, n-tier architecture, web services, microservices, service locators, unit of work patterns and object-relational mappers slathered all through the project.

Don’t get me wrong. All these things I just mentioned are useful, no ifs, ands or buts about it … AT THE RIGHT TIME.

Ha! Gotcha!

Many times, I see software projects that are full of these design and architectural patterns even though I can find no logical reason for them.

Take the concept of Inversion of Control/Dependency Injection frameworks and containers.

I won’t go into the nitty-gritty details of what IoC and DI frameworks do. In short, they help you isolate the places in your codebase where you think the underlying requirements may change over time.

For instance, let’s suppose your application uses an Oracle database to persist any and all data your application needs to use.

Then let’s suppose your company’s Chief Technology Officer issues a new mandate to all software IT staff, that Oracle will no longer be allowed for software application development and that all existing applications AND new applications must use Microsoft’s flagship database product, SQL Server.

Sure, this will cause a lot of groaning and grumbling amongst the IT staff, but orders are orders, and each software development team must go through their respective projects and code and figure out how to swap out any code dependencies from Oracle and use SQL Server instead.

It ends up involving a lot of ripping out of existing code, replacing it with new code, retesting EVERYTHING to ensure the code changes and swapping haven’t caused any new bugs or defects in the application, and then praying everything works at the end of the day.

By implementing the Dependency Injection and Inversion of Control design patterns, you can make the task of swapping out this database-related code much easier to implement and maintain.

These patterns help to ISOLATE places in your application that can change over time, such as swapping out one database for another. And once IoC and Dependency Injection are in place, the next time you need to swap out the database again … say the CTO reads about how totally awesome NoSQL databases are, and issues a new mandate for all IT teams to replace SQL Server with MongoDB … your codebase is already structured so that the swap is much easier.
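As a rough illustration of that isolation, here is a minimal Python sketch of constructor-based dependency injection. Everything in it is hypothetical: `CustomerStore`, `OracleCustomerStore`, `SqlServerCustomerStore` and `CustomerService` are names I made up, and the two store classes keep data in a dictionary instead of talking to a real database.

```python
from typing import Protocol


class CustomerStore(Protocol):
    """The only thing the rest of the application knows about storage."""

    def save(self, customer_id: int, name: str) -> None: ...

    def load(self, customer_id: int) -> str: ...


class OracleCustomerStore:
    """Hypothetical stand-in: a real version would call an Oracle driver."""

    def __init__(self) -> None:
        self._rows: dict[int, str] = {}

    def save(self, customer_id: int, name: str) -> None:
        self._rows[customer_id] = name

    def load(self, customer_id: int) -> str:
        return self._rows[customer_id]


class SqlServerCustomerStore:
    """Hypothetical stand-in: a real version would call a SQL Server driver."""

    def __init__(self) -> None:
        self._rows: dict[int, str] = {}

    def save(self, customer_id: int, name: str) -> None:
        self._rows[customer_id] = name

    def load(self, customer_id: int) -> str:
        return self._rows[customer_id]


class CustomerService:
    """Business logic that never names a specific database."""

    def __init__(self, store: CustomerStore) -> None:
        self._store = store  # the dependency is injected, not created here

    def register(self, customer_id: int, name: str) -> str:
        self._store.save(customer_id, name.strip())
        return self._store.load(customer_id)


# Swapping databases means changing this one line, not CustomerService.
service = CustomerService(SqlServerCustomerStore())
```

Under these assumptions, the CTO’s mandate touches only the line that chooses which store to construct. The service code stays as it is.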

Sounds good, right?

So why does it sound like I’m just about to get back on my soapbox and preach about the dangers of IoC and Dependency Injection?

Uh, yeah, because I am.

The thing to remember is that all software development is about BENEFITS and TRADEOFFS.

And usually, anything that counts as a BENEFIT comes with TRADEOFFS attached.

Dependency Injection and Inversion of Control patterns are no different.

Yes, they solve the problem of making it easier to swap out code, but as the late, great science fiction writer Robert A. Heinlein was fond of saying, “TANSTAAFL,” short for There Ain’t No Such Thing As A Free Lunch.

The price to pay for implementing IoC and DI in your codebase is obfuscation. If you do a before-and-after comparison of the same codebase with and without IoC/DI, even non-programmers will be quick to notice that the code WITH IoC/DI patterns is much harder to read and comprehend.
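Here is a small before-and-after sketch of that cost in Python. The `Container` class is a toy I wrote for illustration, not the API of any real IoC framework, and `Greeter`, `welcome_direct` and `welcome_via_container` are equally hypothetical names.

```python
from typing import Any, Callable


class Greeter:
    def greet(self, name: str) -> str:
        return f"Hello, {name}!"


# BEFORE: no container. The call can be followed from top to bottom.
def welcome_direct(name: str) -> str:
    return Greeter().greet(name)


# AFTER: a toy IoC container that maps string keys to factories.
class Container:
    def __init__(self) -> None:
        self._factories: dict[str, Callable[[], Any]] = {}

    def register(self, key: str, factory: Callable[[], Any]) -> None:
        self._factories[key] = factory

    def resolve(self, key: str) -> Any:
        # Build a fresh instance from whatever factory was registered.
        return self._factories[key]()


container = Container()
# In a real project this wiring often lives in a different file entirely.
container.register("greeter", Greeter)


def welcome_via_container(name: str) -> str:
    # Which class answers here? The reader has to go find the registration.
    return container.resolve("greeter").greet(name)
```

Both functions return the same greeting. The second one is easier to rewire, but a newcomer reading `welcome_via_container` can no longer tell which class does the work without hunting down the `register` call.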

Which goes back to the original point about why so many software developers find brownfield projects and inherited codebases overcomplicated, hard to read and over-engineered.

More often than not, at least in my observation, I don’t think enough of these developers are asking the most important question when deciding how to implement a specific feature of an application in a certain way.

What’s The Tradeoff?

It’s all about making choices when developing software. What technical stack to use. What programming language. What frameworks and libraries. And a million other decisions about what goes into the architecture and implementation of an application.

Yet too many times, we software developers end up implementing software in a certain way because it’s the way it was done before.

Well, what’s so wrong with that…if it was done before, doesn’t that imply there must have been a good reason?

Yet, amazingly, I often find this isn’t the case. What I eventually learn, when I dig into the original design decisions behind a piece of functionality in a codebase, is that no one REMEMBERS what the original reason was.

Other times, the original software developer(s) have the mentality of “future proofing” their code.

Let’s go back to the example of the changing database scenario and using IoC and Dependency Injection to help deal with the changes.

Some developers will implement IoC/DI in a codebase to FUTURE PROOF their code. In other words, they assume it’s worth the cost of harder-to-read code, plus the extra time and effort it takes to implement IoC/DI, because no matter what, their code is now future-proofed forever against database changes.

Here’s the problem with that line of thinking … what happens if the underlying database never ends up being swapped out?

You’ve just implemented something for a non-existent reason.

This kind of speculative building is exactly what the YAGNI principle warns against. YAGNI is short for You Ain’t Gonna Need It!

I can’t fault software developers entirely for falling into this trap. I’m certainly not innocent … I’ve ignored YAGNI as often as the next software developer. And it’s constantly drilled into our heads that these kinds of design patterns and ways of building software are a GOOD THING.

Yes, they certainly CAN be a good thing, when you apply them at the RIGHT TIME and in the RIGHT PLACE.

But I’ve been trying to get myself into the habit of constantly asking myself what costs and tradeoffs are involved when making any sort of design or architectural decision when I’m developing my software applications.

It’s not easy, I assure you. We computer programmers are easily attracted to the latest and shiniest software library, framework, or new way of thinking.

I remember when XML was the digital messiah that was supposed to solve all of our cross-platform, universal data format/exchange needs.

That is, until JSON came along and offered a much simpler cross-platform, universal data format.

The same goes for SOAP and WSDL web services, which were supposed to be the be-all and end-all of web service technology.

Until REST and microservices became the new hot thing.

The list goes on and on.

Remember, folks, only YOU can prevent over-engineered, hard-to-read code.

Get your finger off that mouse and stop downloading that latest third-party framework! That’s it, slowly …

Repeat after me, You Ain’t Gonna Need It … I’m deadly serious.