I’ve been an Apple fan since my elementary school years when my school bought their first shiny new 8-bit Apple II personal computer and plunked it next to the librarian’s desk.

This love of Apple gizmos stayed with me through college and throughout my adult career as a professional software developer: first Mac desktop computers and laptops, and more recently, iPads and iPhones.

iOS 11, the latest version of the operating system that powers Apple’s iPhone and iPad, brings a radical change. (Apple TV and Apple Watch run their own iOS-derived systems, tvOS and watchOS.)

It really didn’t dawn on me how impactful this change would be until I fired up a few of my old apps and games which I purchased back in 2008.

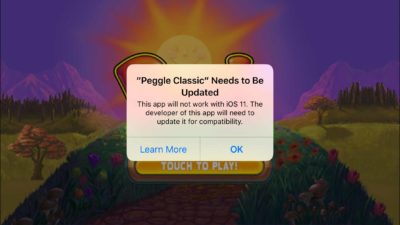

When I launched them on my iPhone, a warning message immediately popped up on my screen: if I upgrade to iOS 11, this game, along with many of my older apps and games that haven’t been updated, will no longer work.

For those of you unfamiliar with the underlying reason why the new version of iOS invalidated many of these older apps and games, it’s because iOS 11 no longer allows 32-bit applications to run.

It actually shouldn’t come as a surprise to anyone who uses Apple’s iPhone products. iOS and the iPhone hardware have been fully 64-bit since the days of the iPhone 5s.

But Apple knew that the mass adoption of 64-bit applications wouldn’t happen overnight. Heck, if Rome wasn’t built in a day, it stands to reason Apple shouldn’t expect everyone to hop on the 64-bit bandwagon overnight either.

But what exactly is all this business with “32-bit” and “64-bit” apps? And more importantly, why did Apple make this momentous decision to deprecate and invalidate all 32-bit apps for anyone who decided to upgrade to iOS 11?

What’s The Difference Between 32 and 64-Bit Anyway?

The way marketers and advertisers use the term, it sounds a bit like a measurement of power, in the same way car makers use the term “horsepower” to measure how powerful vehicles are.

And in an odd sort of way, it’s not a bad analogy. The number of “bits” a computer possesses is indeed a bit like describing the horsepower of a car.

But analogies only go so far. To really understand what this 32-bit or 64-bit business is all about, we must delve into the concept of what a “bit” actually is.

A bit (short for binary digit) is the smallest unit of data which every computer device on the planet, from the dawn of computers to the latest bleeding edge devices we have today, can process.

It’s referred to as a BINARY digit because, at their very core, computers can really only understand two values … 1s and 0s.

1s actually represent an “on” state, and 0s represent an “off” state.

The electrical voltage that powers a computer is what is actually responsible for flipping all the tiny little switches inside a computer’s digital “brain” into either the 1 (on) or 0 (off) state. (Am I the only one who thinks about the movie “Tron” whenever I think about all this digital wizardry happening down at the lowest level of a computer?)

A bit, whether it’s ON or OFF, by itself, doesn’t really do much.

But once you start chaining bits together, a computer starts becoming useful.
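To make “chaining bits together” a little more concrete, here is a minimal Python sketch. The function names `to_bits` and `distinct_values` are my own, made up purely for illustration. It shows the on/off pattern behind a small number, and how each extra bit doubles the number of patterns a chain can hold.

```python
def to_bits(value, width=8):
    """Return the on/off pattern of a non-negative integer as a string of 1s and 0s."""
    if value < 0 or value >= 2 ** width:
        raise ValueError("value does not fit in the given number of bits")
    # "0{width}b" means: binary digits, padded with leading zeros to `width` places.
    return format(value, f"0{width}b")


def distinct_values(bit_count):
    """Each extra bit doubles the number of patterns a chain of bits can hold."""
    return 2 ** bit_count


print(to_bits(5))          # 00000101
print(distinct_values(1))  # 2
print(distinct_values(8))  # 256
```

A single bit can only say “on” or “off”, but eight of them chained together can already describe 256 different things.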

So if you chain 8 bits together, you get what is referred to as a BYTE. If you chain 1,000 bytes together, you get what’s referred to as a KILOBYTE. If you keep multiplying by 1,000, you’ll probably start recognizing the words “megabyte” (1,000 kilobytes), “gigabyte” (1,000 megabytes), “terabyte” (1,000 gigabytes), etc.
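The unit ladder above can be sketched in a few lines of Python. This is only an illustration using the decimal (1,000-based) steps described here; the names `bits_in` and `humanize` are hypothetical, and some tools count in steps of 1,024 instead.

```python
BITS_PER_BYTE = 8
UNITS = ["B", "kB", "MB", "GB", "TB"]  # each unit is 1,000 of the previous one


def bits_in(byte_count):
    """Total number of individual bits in a given number of bytes."""
    return byte_count * BITS_PER_BYTE


def humanize(byte_count):
    """Express a byte count in the largest unit that keeps the number at 1 or above."""
    size = float(byte_count)
    for unit in UNITS:
        if size < 1000 or unit == UNITS[-1]:
            return f"{size:g} {unit}"
        size /= 1000


print(bits_in(1))           # 8
print(humanize(512))        # 512 B
print(humanize(1000))       # 1 kB
print(humanize(2_500_000))  # 2.5 MB
```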

But no matter how big these numbers get, the CPU (which is the ‘brain’ of the computer) ultimately breaks these gigantic numbers down to 1s and 0s.

So what exactly is contained within these bits, bytes, kilobytes, etc?

Computer instructions, along with the data those instructions work on.

Things like adding two numbers together. Or multiplying two numbers together. And a whole slew of other useful things that computers are really good at, all of which essentially boil down to calculating numbers at an incredibly fast, superhuman speed.

The CPU (central processing unit) is the ‘brains’ of the computer. Think of it as the short order cook at a cafe, waiting for customer orders so he can grill up hamburgers, make pancakes or whatever. And you can think of each menu item on a customer order as one of the digital computer instructions contained within a byte, kilobyte, megabyte, etc.

The earliest generation personal computers could only process a limited number of these computer instructions per second.

When I was a kid in the 1970s, computer chips could only work on 8 bits (1 byte) of information at a time, which is why they were referred to as “8-bit computers”.

If we go back to the short order cook analogy, you can think of a single food item on the customer order as an 8-bit instruction. So the short order cook (the computer CPU) would only be able to grill a single food item, like a hamburger, before he could begin cooking something else.

But as with anything else in technology, computers quickly began to be able to process more information in the same amount of time.

8-bit computers were eventually replaced by 16-bit computers, which could handle TWICE as many bits in a single step as 8-bit computers. So going back to the short order cook example, instead of putting a single hamburger on the grill and waiting for that to finish before he could begin grilling up something else, the short order cook could actually work on TWO hamburger orders in the same amount of time he was formerly only able to work on one.

This is a good thing because now computers can process more computer instructions in the same amount of time and accomplish more tasks.

Eventually, 16-bit computers were replaced by 32-bit computers and that meant the CPU could handle even more computer instructions in the same amount of time.
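One way to see why a wider CPU gets more done per step is to add two large numbers the way a narrow CPU has to: one chunk at a time, carrying any overflow into the next chunk. The sketch below is a simplified model of that idea and not how any particular chip is wired; the function name `add_in_chunks` and its parameters are my own invention.

```python
def add_in_chunks(a, b, chunk_bits, total_bits=32):
    """Add two unsigned integers chunk by chunk, like a CPU that is only
    `chunk_bits` wide. Returns (sum, number_of_steps_needed)."""
    mask = (1 << chunk_bits) - 1           # keeps only the lowest chunk_bits bits
    result, carry, steps = 0, 0, 0
    for shift in range(0, total_bits, chunk_bits):
        chunk_sum = ((a >> shift) & mask) + ((b >> shift) & mask) + carry
        result |= (chunk_sum & mask) << shift
        carry = chunk_sum >> chunk_bits    # overflow rolls into the next chunk
        steps += 1
    # Anything that overflows total_bits wraps around, as it would in hardware.
    return result & ((1 << total_bits) - 1), steps


a, b = 1_000_000, 2_345_678
for width in (8, 16, 32):
    total, steps = add_in_chunks(a, b, chunk_bits=width)
    print(f"{width}-bit chunks: {total} in {steps} step(s)")
# 8-bit chunks: 3345678 in 4 step(s)
# 16-bit chunks: 3345678 in 2 step(s)
# 32-bit chunks: 3345678 in 1 step(s)
```

The answer is the same every time; only the number of trips to the grill changes. Note that the savings only show up when the numbers are too big for the narrower chunk, so “twice as wide” is not automatically “twice as fast” for every task.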

So where do all these computer instructions originally come from? Well, certainly not from the CPU. The CPU has no long-term storage of its own. As soon as the electrical power flowing through a computer is cut off, all of those computer instructions that were whizzing around and getting processed by the CPU chip vanish in a poof.

So you need a sort of “warehouse” that the CPU can fetch all those computer instructions from. In a computer, this warehouse is the memory chips. And the more memory a computer possesses, the more computer instructions it can keep close at hand for the CPU to fetch and process.

If you want to permanently store computer instructions even after a computer is turned off, you need a permanent storage mechanism, like a hard drive, to store all those computer instructions.

So when you first turn on a computer, it must fetch the computer instructions from the hard drive and transfer them to the memory chips. Only once they are in memory can the CPU fetch those instructions from the memory chips and actually process them.

Interestingly enough, the pathway that carries data from the hard disk to the memory chips, and then on to the CPU where the actual processing of the computer instructions happens, is referred to as a computer “bus”, which makes sense.

The bus is responsible for ferrying all this digital information around so that it either ends up traveling to the CPU or from the CPU, depending on what exactly you’re trying to accomplish.

Whenever I think of a computer bus, I can’t help but think of a school bus. A school bus is much more efficient for ferrying students to school than an individual car, even if that car is a super fast Ferrari, because a Ferrari, while much faster than a bus, can only carry a few passengers at any one time. The school bus, while slower than the Ferrari, can ultimately ferry many more students to school (the CPU) in a single trip (or computer fetch).
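The school bus idea boils down to simple arithmetic: the wider the bus, the fewer trips it takes to move the same load. Here is a rough Python sketch of that, where `bus_trips` is a made-up helper and the 1,000-byte payload is just an example figure.

```python
def bus_trips(payload_bytes, bus_width_bits):
    """Number of trips needed to move a payload across a bus of the given width."""
    payload_bits = payload_bytes * 8
    # Round up: a half-empty bus still has to make the trip.
    return (payload_bits + bus_width_bits - 1) // bus_width_bits


for width in (8, 16, 32, 64):
    print(f"{width}-bit bus: {bus_trips(1000, width)} trips")
# 8-bit bus: 1000 trips
# 16-bit bus: 500 trips
# 32-bit bus: 250 trips
# 64-bit bus: 125 trips
```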

And when you increase both the clock speed of the CPU and its “wideness” (8-bit, 16-bit, 32-bit, 64-bit, etc.), you win twice: the CPU handles more information in each step, and it finishes all those steps sooner, very much like a Ferrari will reach the finish line in a race much faster than a Volkswagen bus.

So now that we have a basic understanding of the underlying architecture of CPUs and how they fetch and process computer instructions, we can go back to the original warning message my iPhone popped up when I tried launching a few of my old games and apps.

Why Apple Ditched 32-Bit Apps

When the iPhone first arrived on the scene in 2007, the CPU was a 32-bit processor … in any given clock cycle, it could fetch and process computer instructions that were 32 bits “wide”.

But in 2013, Apple introduced its first 64-bit CPU in the iPhone 5s. Every new iPhone and iPad generation since has shipped with a 64-bit CPU chip that can handle twice as many bits per clock cycle as the former 32-bit CPU chips.

But Apple no longer wants to support apps that were originally designed for their 32-bit CPUs, as they see no reason to continue supporting that older 32-bit computer chip architecture.

So if I upgrade my latest iPhone to iOS version 11, iOS will no longer allow any apps that were built only for that 32-bit CPU to run. The original developers of any 32-bit apps will have to update and rebuild these apps to work under the 64-bit CPU architecture, or else face extinction.
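Every program is built for one width or the other, and you can actually ask a running program which one it is. The Python sketch below is only an analogy for what iOS checks before launching an app: it reports the width of the Python interpreter it runs in, not anything about an iPhone app. The function names are my own; `struct.calcsize("P")` is the standard library call that returns the size of a native pointer in bytes.

```python
import struct


def pointer_width_bits():
    """Width, in bits, of a native pointer in the running Python interpreter."""
    return struct.calcsize("P") * 8


def largest_unsigned(bits):
    """Biggest whole number that fits in the given number of bits."""
    return 2 ** bits - 1


print(f"This Python was built as a {pointer_width_bits()}-bit program")
print(largest_unsigned(32))  # 4294967295
print(largest_unsigned(64))  # 18446744073709551615
```

On most modern machines the first line will report 64, which is exactly the club that old 32-bit apps are no longer allowed into.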

As you can imagine, it’s already caused grumblings and complaints in the Apple community, but hey, that’s the march of progress… especially in the world of technology, NOTHING lasts forever.

But man, I really loved that game.

Would it help if I camped outside the house of the original game designer until he rewrites his app as 64-bit?

You talked me into it! Wait… do I hear police sirens?